The High-Level Expert Group on Artificial Intelligence (HLEG) has produced a new tool for assessing the trustworthiness of AI systems. The Assessment List for Trustworthy Artificial Intelligence (ALTAI) is intended to assess whether AI systems, at all stages of their development and deployment life cycles, comply with seven requirements of Trustworthy AI.

The HLEG was set up by the European Commission to support the European Strategy on AI. Created in June 2018, the HLEG produces recommendations on the development of EU policy, together with recommendations on the ethical, social and legal issues of Artificial Intelligence. It comprises over 50 experts drawn from industry, academia and civil society. It is also the steering group for the European AI Alliance, essentially a forum that provides feedback to the HLEG and contributes more widely to the debate on AI within Europe.

The ALTAI Tool

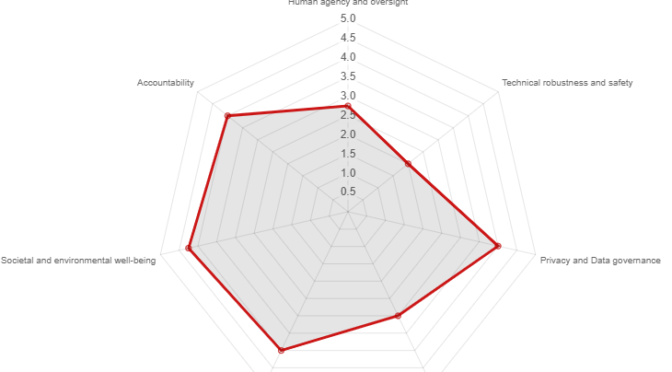

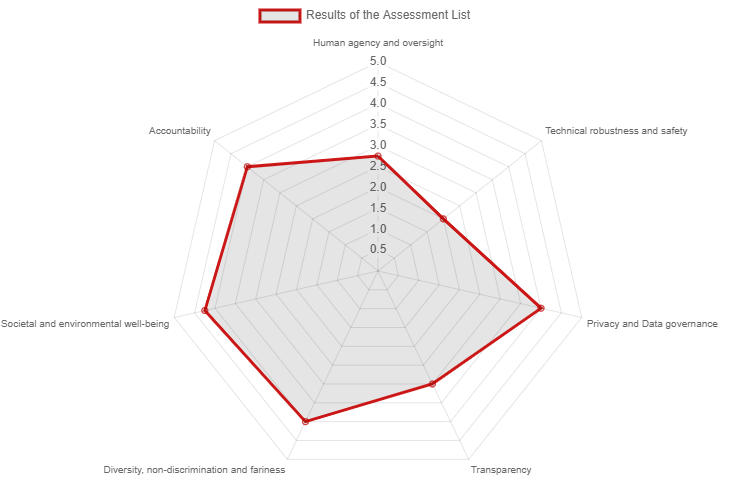

Based on seven key requirements, the new ALTAI tool is a semi-automated questionnaire that lets you assess the trustworthiness of your AI system. It does rely on honest answers to the questions, of course! The seven key requirements are:

- Human Agency and Oversight.

- Technical Robustness and Safety.

- Privacy and Data Governance.

- Transparency.

- Diversity, Non-discrimination and Fairness.

- Societal and Environmental Well-being.

- Accountability.

Using the system is relatively straightforward. First, you must create an account and log in to the ALTAI website. Then choose 'My ALTAIs'. The system allows you to complete, store and update multiple ALTAI questionnaires. Once you have completed a questionnaire, the system produces a graphical representation of your 'trustworthiness' (the spider graph above), together with a set of specific recommendations based on your answers. Note that the ALTAI website is a prototype of an interactive version of the Assessment List for Trustworthy AI. You should not enter personal information or intellectual property while using the website.
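To make the idea of a spider graph more concrete, here is a minimal sketch of how one score per requirement could be placed on seven axes. Only the requirement names come from the list above. The 0 to 5 scale, the example scores and the `spider_points` function are my own assumptions for illustration, and this is not necessarily how the ALTAI website computes or draws its graph.

```python
import math

# The seven requirement names come from the ALTAI list; everything else
# (the 0-5 scale, the scores, the function name) is hypothetical.
REQUIREMENTS = [
    "Human Agency and Oversight",
    "Technical Robustness and Safety",
    "Privacy and Data Governance",
    "Transparency",
    "Diversity, Non-discrimination and Fairness",
    "Societal and Environmental Well-being",
    "Accountability",
]


def spider_points(scores, max_score=5.0):
    """Map one score per requirement to (x, y) vertices of a spider graph."""
    if len(scores) != len(REQUIREMENTS):
        raise ValueError("expected one score per requirement")
    points = []
    for i, score in enumerate(scores):
        # The first axis points straight up; later axes proceed clockwise.
        angle = math.pi / 2 - 2 * math.pi * i / len(scores)
        radius = score / max_score  # normalise so a full score sits at radius 1
        points.append((radius * math.cos(angle), radius * math.sin(angle)))
    return points


example_scores = [4, 3, 5, 2, 3, 4, 3]  # made-up self-assessment scores
for name, (x, y) in zip(REQUIREMENTS, spider_points(example_scores)):
    print(f"{name}: ({x:.2f}, {y:.2f})")
```

Joining the resulting points in order gives the polygon of the graph. A low score on any one requirement pulls that vertex towards the centre, which is what makes weak areas easy to spot at a glance.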

I found the system easy to use, but would have liked to see a graph or tree showing how the question boxes are arranged, and a clearer explanation of the red and blue outlines - in short, more transparency from the assessment system itself!

I also have some reservations about the independence of the person completing the assessment, and about the possibility of bias when someone closely involved in an AI development project is tasked with assessing it. This could be addressed by using an independent, suitably qualified and competent auditor.

It's very encouraging to see the emergence of these kinds of audit systems specifically targeted at the deployment of AI technologies. Hopefully, as these systems develop, they will align with the international standards that are currently being developed - for example the IEEE Ethically Aligned Design standards, such as P7001 for Transparency of Autonomous Systems.