Back in the late 1990s, a small group of people realised that storing the year in a two-digit format might cause some problems at midnight on 31st December 1999. As the date flipped over from 31-12-99 to the very odd-looking 01-01-00, would critical IT systems fail? After all, the date had never gone 'backwards'. … Continue Reading ››

Category Archives: Ethics

Humans are more than biological creatures

Earlier this month I attended one of the regular BRLSI philosophy talks. Andreas Wasmuht prepared an excellent introduction to the work of Martin Heidegger, specifically his work Being and Time (first published in 1927). This post is not specifically about that work, but it got me thinking again about how much more … Continue Reading ››

Taking AI Deployment Seriously

I'm just back from Copenhagen where we ran a roundtable on AI assurance, asking how we can best use tools to reduce risk and address compliance with upcoming regulations, particularly the European AI Act. The speaker details are in the link, and there will be a full video too. We had about … Continue Reading ››

IET Responsible AI Webinar

Delighted to be part of the programme for the new @IETevents webinar series on Responsible AI, exploring cutting-edge technology and future applications over seven sessions in November and December. Booking is open now: http://ow.ly/Kcdg30rWpTu

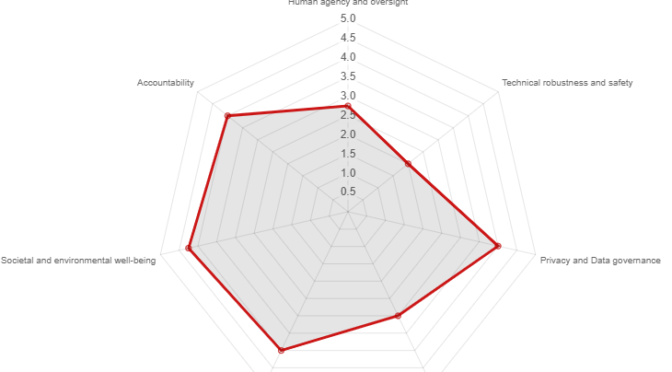

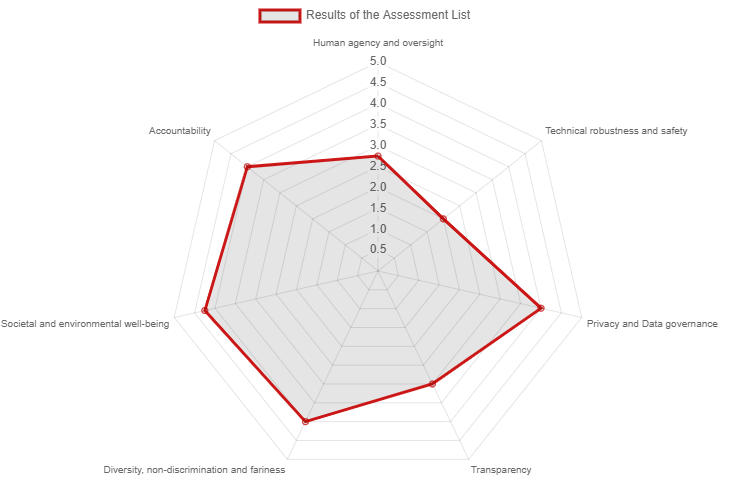

ALTAI – A new assessment tool from the High-Level Expert Group on Artificial Intelligence (HLEG)

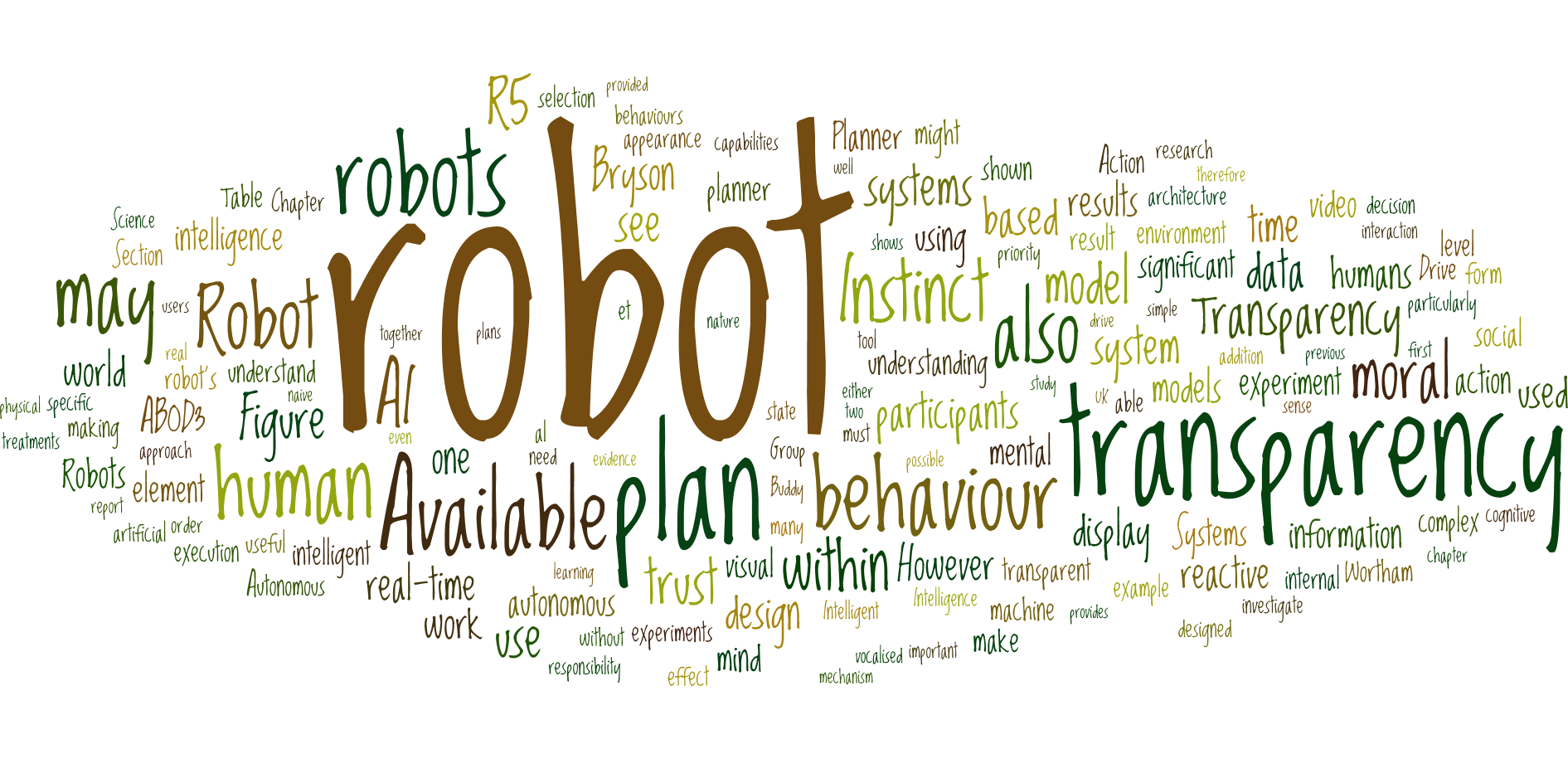

New Book: Transparency for Robots and Autonomous Systems

Battle of Ideas 2019 – Can you build a human?

I was delighted to be part of the panel for 'From Robots to AI: Can You Build a Human?' at the Barbican Centre in London a couple of weeks ago. Chaired by Timandra Harkness, along with … Continue Reading ››

AmonI UPDATE

Today I spent a couple of hours in hackathon mode with fellow members of the AmonI (Artificial Models of Natural Intelligence) research group at Bath. We decided it was time to bring the AmonI web pages up to date, so that the website properly reflects our previous and current … Continue Reading ››

Bath TEDx – Will robots be our new best friends?

I'm looking forward to speaking at this TEDx event at the end of this month. The speaker lineup is diverse, and I'm sure we will all contribute in different ways to 'Light Up the Future' - the theme of these TEDx talks. I'll be speaking about how AI and robotics, in … Continue Reading ››

Why AI may fail to help us with solutions to our problems

Continue Reading ››
